How Is Big Data Different From Data Structured In A Relational Database

What is big data?

Big data is a combination of structured, semistructured and unstructured data collected by organizations that can be mined for information and used in machine learning projects, predictive modeling and other advanced analytics applications.

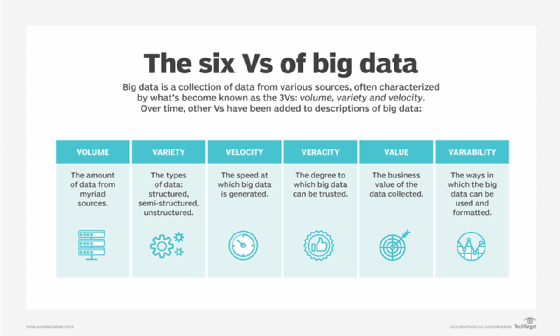

Systems that process and store big data have become a common component of data management architectures in organizations, combined with tools that support big data analytics uses. Big data is often characterized by the three V's:

- the large volume of data in many environments;

- the wide variety of data types frequently stored in big data systems; and

- the velocity at which much of the data is generated, collected and processed.

These characteristics were first identified in 2001 by Doug Laney, then an analyst at consulting firm Meta Group Inc.; Gartner further popularized them after it acquired Meta Group in 2005. More recently, several other V's have been added to different descriptions of big data, including veracity, value and variability.

Although big data doesn't equate to any specific volume of data, big data deployments often involve terabytes, petabytes and even exabytes of data created and collected over time.

Why is big data important?

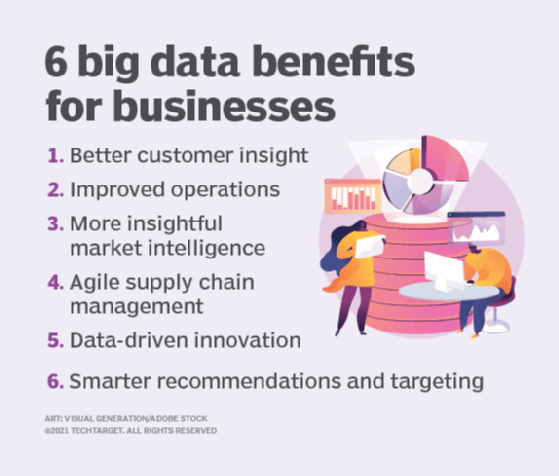

Companies use big data in their systems to improve operations, provide better customer service, create personalized marketing campaigns and take other actions that, ultimately, can increase revenue and profits. Businesses that use it effectively hold a potential competitive advantage over those that don't because they're able to make faster and more informed business decisions.

For example, big data provides valuable insights into customers that companies can use to refine their marketing, advertising and promotions in order to increase customer engagement and conversion rates. Both historical and real-time data can be analyzed to assess the evolving preferences of consumers or corporate buyers, enabling businesses to become more responsive to customer wants and needs.

Big data is also used by medical researchers to identify disease signs and risk factors and by doctors to help diagnose illnesses and medical conditions in patients. In addition, a combination of data from electronic health records, social media sites, the web and other sources gives healthcare organizations and government agencies up-to-date information on infectious disease threats or outbreaks.

Here are some more examples of how big data is used by organizations:

- In the energy industry, big data helps oil and gas companies identify potential drilling locations and monitor pipeline operations; likewise, utilities use it to track electrical grids.

- Financial services firms use big data systems for risk management and real-time analysis of market data.

- Manufacturers and transportation companies rely on big data to manage their supply chains and optimize delivery routes.

- Other government uses include emergency response, crime prevention and smart city initiatives.

What are examples of big data?

Big data comes from myriad sources -- some examples are transaction processing systems, customer databases, documents, emails, medical records, internet clickstream logs, mobile apps and social networks. It also includes machine-generated data, such as network and server log files and data from sensors on manufacturing machines, industrial equipment and internet of things devices.

In addition to data from internal systems, big data environments frequently incorporate external data on consumers, financial markets, weather and traffic conditions, geographic information, scientific research and more. Images, videos and audio files are forms of big data, too, and many big data applications involve streaming data that is processed and collected on a continual basis.

Breaking down the V's of big data

Volume is the most commonly cited characteristic of big data. A big data environment doesn't have to contain a large amount of data, but most do because of the nature of the data being collected and stored in them. Clickstreams, system logs and stream processing systems are among the sources that typically produce massive volumes of data on an ongoing basis.

Big data also encompasses a broad variety of data types, including the following:

- structured data, such as transactions and financial records;

- unstructured data, such as text, documents and multimedia files; and

- semistructured data, such as web server logs and streaming data from sensors.
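As a rough illustration of the three categories, the Python sketch below handles one small sample of each kind using only the standard library. The sample values and field names (`order_id`, `temp_c`, `pressure_kpa` and so on) are invented for the example and do not come from any particular system.

```python
import csv
import io
import json

# Structured: fixed columns, every row has the same fields (hypothetical sample).
structured_csv = "order_id,amount\n1001,25.50\n1002,14.00\n"
rows = list(csv.DictReader(io.StringIO(structured_csv)))
total = sum(float(row["amount"]) for row in rows)

# Semistructured: self-describing, but the set of fields can vary per record.
sensor_event = json.loads('{"sensor": "pump-7", "temp_c": 71.3, "tags": ["line-2"]}')
temperature = sensor_event.get("temp_c")
pressure = sensor_event.get("pressure_kpa")  # field absent in this record -> None

# Unstructured: free text with no schema; any structure has to be derived.
review = "Great product, but delivery was slow."
word_count = len(review.split())
```

The point of the contrast is that the structured rows can be summed directly, the semistructured record has to be probed for fields that may be missing, and the free text yields nothing until some analysis step is applied to it.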

Various data types may need to be stored and managed together in big data systems. In addition, big data applications often include multiple data sets that may not be integrated upfront. For example, a big data analytics project may try to forecast sales of a product by correlating data on past sales, returns, online reviews and customer service calls.

Velocity refers to the speed at which data is generated and must be processed and analyzed. In many cases, sets of big data are updated on a real- or near-real-time basis, instead of the daily, weekly or monthly updates made in many traditional data warehouses. Managing data velocity is also important as big data analysis further expands into machine learning and artificial intelligence (AI), where analytical processes automatically find patterns in data and use them to generate insights.

More characteristics of big data

Looking beyond the original three V's, here are details on some of the other ones that are now often associated with big data:

- Veracity refers to the degree of accuracy in data sets and how trustworthy they are. Raw data collected from various sources can cause data quality issues that may be difficult to pinpoint. If they aren't fixed through data cleansing processes, bad data leads to analysis errors that can undermine the value of business analytics initiatives. Data management and analytics teams also need to ensure that they have enough accurate data available to produce valid results.

- Some data scientists and consultants also add value to the list of big data's characteristics. Not all the data that's collected has real business value or benefits. As a result, organizations need to confirm that data relates to relevant business issues before it's used in big data analytics projects.

- Variability also often applies to sets of big data, which may have multiple meanings or be formatted differently in separate data sources -- factors that further complicate big data management and analytics.

Some people ascribe even more V's to big data; various lists have been created with between seven and 10.

How is big data stored and processed?

Big data is often stored in a data lake. While data warehouses are commonly built on relational databases and contain structured data only, data lakes can support various data types and typically are based on Hadoop clusters, cloud object storage services, NoSQL databases or other big data platforms.

Many big data environments combine multiple systems in a distributed architecture; for example, a central data lake might be integrated with other platforms, including relational databases or a data warehouse. The data in big data systems may be left in its raw form and then filtered and organized as needed for particular analytics uses. In other cases, it's preprocessed using data mining tools and data preparation software so it's ready for applications that are run regularly.

Big data processing places heavy demands on the underlying compute infrastructure. The required computing power often is provided by clustered systems that distribute processing workloads across hundreds or thousands of commodity servers, using technologies like Hadoop and the Spark processing engine.

Getting that kind of processing capacity in a cost-effective way is a challenge. As a result, the cloud is a popular location for big data systems. Organizations can deploy their own cloud-based systems or use managed big-data-as-a-service offerings from cloud providers. Cloud users can scale up the required number of servers just long enough to complete big data analytics projects. The business only pays for the storage and compute time it uses, and the cloud instances can be turned off until they're needed again.

How big data analytics works

To get valid and relevant results from big data analytics applications, data scientists and other data analysts must have a detailed understanding of the available data and a sense of what they're looking for in it. That makes data preparation, which includes profiling, cleansing, validation and transformation of data sets, a crucial first step in the analytics process.
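The four preparation steps named above can be sketched in plain Python. This is a minimal illustration rather than how any specific data preparation tool works: the records, the `customer` and `spend` fields, and the rules (drop missing values, reject negative amounts) are all assumptions made for the example.

```python
from statistics import mean

# Hypothetical raw records; None and a negative amount stand in for bad data.
raw = [
    {"customer": " Alice ", "spend": "120.50"},
    {"customer": "bob", "spend": None},
    {"customer": "Carol", "spend": "-5"},
    {"customer": "dave", "spend": "80"},
]

def profile(records):
    """Profiling: count the records and the missing spend values."""
    missing = sum(1 for r in records if r["spend"] is None)
    return {"rows": len(records), "missing_spend": missing}

def cleanse(records):
    """Cleansing: drop rows with missing spend and normalize customer names."""
    return [
        {"customer": r["customer"].strip().title(), "spend": r["spend"]}
        for r in records
        if r["spend"] is not None
    ]

def validate(records):
    """Validation: keep rows whose spend parses as a non-negative number."""
    valid = []
    for r in records:
        try:
            if float(r["spend"]) >= 0:
                valid.append(r)
        except ValueError:
            pass  # unparseable amount: treat the row as invalid
    return valid

def transform(records):
    """Transformation: convert spend from text to a float for analysis."""
    return [{**r, "spend": float(r["spend"])} for r in records]

report = profile(raw)
prepared = transform(validate(cleanse(raw)))
average_spend = mean(r["spend"] for r in prepared)
```

Running the profile first gives a sense of how much of the data is unusable before any rows are dropped, which is the "detailed understanding of the available data" the paragraph calls for.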

Once the data has been gathered and prepared for analysis, various data science and advanced analytics disciplines can be applied to run different applications, using tools that provide big data analytics features and capabilities. Those disciplines include machine learning and its deep learning offshoot, predictive modeling, data mining, statistical analysis, streaming analytics, text mining and more.

Using customer data as an example, the different branches of analytics that can be done with sets of big data include the following:

- Comparative analysis. This examines customer behavior metrics and real-time customer engagement in order to compare a company's products, services and branding with those of its competitors.

- Social media listening. This analyzes what people are saying on social media about a business or product, which can help identify potential problems and target audiences for marketing campaigns.

- Marketing analytics. This provides information that can be used to improve marketing campaigns and promotional offers for products, services and business initiatives.

- Sentiment analysis. All of the data that's gathered on customers can be analyzed to reveal how they feel about a company or brand, customer satisfaction levels, potential issues and how customer service could be improved.

Big data management technologies

Hadoop, an open source distributed processing framework released in 2006, initially was at the center of most big data architectures. The development of Spark and other processing engines pushed MapReduce, the engine built into Hadoop, more to the side. The result is an ecosystem of big data technologies that can be used for different applications but often are deployed together.
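For readers unfamiliar with the MapReduce model mentioned above, the following single-process Python sketch shows its three conceptual phases on a word count. It does not use Hadoop's API and runs on one machine; the function names `map_phase`, `shuffle` and `reduce_phase` are illustrative only. In a real cluster, the map and reduce calls would run in parallel on many servers and the framework would handle the grouping step.

```python
from collections import defaultdict

def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]

def shuffle(pairs):
    """Shuffle: group all emitted values by key between the two phases."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped

def reduce_phase(key, values):
    """Reduce: combine the values for one key into a single result."""
    return key, sum(values)

lines = ["big data big clusters", "data lakes store big data"]
mapped = [pair for line in lines for pair in map_phase(line)]
counts = dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())
```

Because each line is mapped independently and each key is reduced independently, the work can be split across servers without the pieces needing to coordinate, which is what makes the model suit commodity clusters.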

Big data platforms and managed services offered by IT vendors combine many of those technologies in a single package, primarily for use in the cloud. Currently, that includes these offerings, listed alphabetically:

- Amazon EMR (formerly Elastic MapReduce)

- Cloudera Data Platform

- Google Cloud Dataproc

- HPE Ezmeral Data Fabric (formerly MapR Data Platform)

- Microsoft Azure HDInsight

For organizations that want to deploy big data systems themselves, either on premises or in the cloud, the technologies that are available to them in addition to Hadoop and Spark include the following categories of tools:

- storage repositories, such as the Hadoop Distributed File System (HDFS) and cloud object storage services that include Amazon Simple Storage Service (S3), Google Cloud Storage and Azure Blob Storage;

- cluster management frameworks, like Kubernetes, Mesos and YARN, Hadoop's built-in resource manager and job scheduler, which stands for Yet Another Resource Negotiator but is commonly known by the acronym alone;

- stream processing engines, such as Flink, Hudi, Kafka, Samza, Storm and the Spark Streaming and Structured Streaming modules built into Spark;

- NoSQL databases that include Cassandra, Couchbase, CouchDB, HBase, MarkLogic Data Hub, MongoDB, Neo4j, Redis and various other technologies;

- data lake and data warehouse platforms, among them Amazon Redshift, Delta Lake, Google BigQuery, Kylin and Snowflake; and

- SQL query engines, like Drill, Hive, Impala, Presto and Trino.

Big data challenges

In connection with the processing capacity issues, designing a big data architecture is a common challenge for users. Big data systems must be tailored to an organization's particular needs, a DIY undertaking that requires IT and data management teams to piece together a customized set of technologies and tools. Deploying and managing big data systems also require new skills compared to the ones that database administrators and developers focused on relational software typically possess.

Both of those issues can be eased by using a managed cloud service, but IT managers need to keep a close eye on cloud usage to make sure costs don't get out of hand. Also, migrating on-premises data sets and processing workloads to the cloud is often a complex process.

Other challenges in managing big data systems include making the data accessible to data scientists and analysts, especially in distributed environments that include a mix of different platforms and data stores. To help analysts find relevant data, data management and analytics teams are increasingly building data catalogs that incorporate metadata management and data lineage functions. The process of integrating sets of big data is also often complicated, especially when data variety and velocity are factors.

Keys to an effective big data strategy

In an organization, developing a big data strategy requires an understanding of business goals and the data that's currently available to use, plus an assessment of the need for additional data to help meet the objectives. The next steps to take include the following:

- prioritizing planned use cases and applications;

- identifying new systems and tools that are needed;

- creating a deployment roadmap; and

- evaluating internal skills to see if retraining or hiring is required.

To ensure that sets of big data are clean, consistent and used properly, a data governance program and associated data quality management processes also must be priorities. Other best practices for managing and analyzing big data include focusing on business needs for information over the available technologies and using data visualization to aid in data discovery and analysis.

Big data collection practices and regulations

As the collection and use of big data have increased, so has the potential for data misuse. A public outcry about data breaches and other personal privacy violations led the European Union to approve the General Data Protection Regulation (GDPR), a data privacy law that took effect in May 2018. GDPR limits the types of data that organizations can collect and requires opt-in consent from individuals or compliance with other specified reasons for collecting personal data. It also includes a right-to-be-forgotten provision, which lets EU residents ask companies to delete their data.

While there aren't similar federal laws in the U.S., the California Consumer Privacy Act (CCPA) aims to give California residents more control over the collection and use of their personal information by companies that do business in the state. CCPA was signed into law in 2018 and took effect on Jan. 1, 2020.

To ensure that they comply with such laws, businesses need to carefully manage the process of collecting big data. Controls must be put in place to identify regulated data and prevent unauthorized employees from accessing it.
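One simple form such a control could take is field-level masking based on a user's role. The Python sketch below is a toy illustration of the idea, not a compliance mechanism: the set of regulated fields, the role names and the `view_record` helper are all hypothetical, and a real deployment would rely on the access controls of its data platform.

```python
# Hypothetical classification of which fields count as regulated personal data.
REGULATED_FIELDS = {"email", "date_of_birth"}
# Hypothetical roles that are allowed to see regulated fields.
AUTHORIZED_ROLES = {"privacy_officer"}

def view_record(record, role):
    """Return a copy of the record, masking regulated fields for unauthorized roles."""
    if role in AUTHORIZED_ROLES:
        return dict(record)
    return {
        field: ("***" if field in REGULATED_FIELDS else value)
        for field, value in record.items()
    }

customer = {"id": 42, "email": "ana@example.com", "country": "DE"}
analyst_view = view_record(customer, "analyst")
officer_view = view_record(customer, "privacy_officer")
```

Keeping the classification in one place means that identifying regulated data and restricting access to it are driven by the same definition.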

The human side of big data management and analytics

Ultimately, the business value and benefits of big data initiatives depend on the workers tasked with managing and analyzing the data. Some big data tools enable less technical users to run predictive analytics applications or help businesses deploy a suitable infrastructure for big data projects, while minimizing the need for hardware and distributed software know-how.

Big data can be contrasted with small data, a term that's sometimes used to describe data sets that can be easily used for self-service BI and analytics. A commonly quoted axiom is, "Big data is for machines; small data is for people."

Source: https://www.techtarget.com/searchdatamanagement/definition/big-data
